A Brief History of Computers (in English)

The history of computers is a fascinating journey that spans nearly two centuries, from the mechanical calculating engines designed in the early nineteenth century to the sophisticated systems we rely on today. This narrative is not just about technological advancement; it also reflects societal change and human ingenuity. In this essay, we will look at the key milestones that have shaped the evolution of computers and explore how they have transformed our world.

To begin with, let's outline the main points covered in this article:

1. Early Mechanical Computers (Pre-1940s)

2. The Development of Electronic Computers (1940s)

3. The Rise of Minicomputers and Mainframes (1960s-1970s)

4. The Personal Computer Revolution (1970s-Present)

5. The Modern Age of Cloud Computing and Mobile Devices (21st Century)

Now, let's explore these phases one by one, shedding light on each stage of computer history.

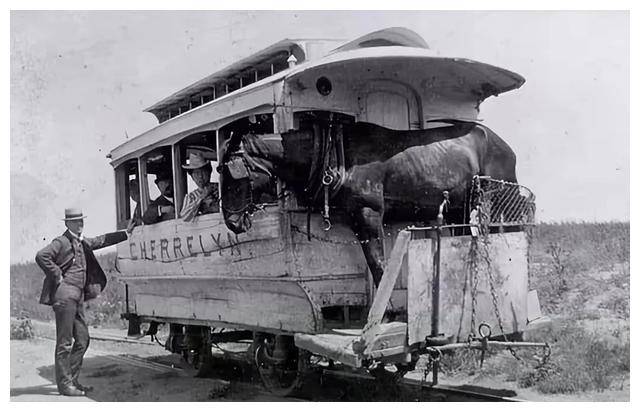

The dawn of computing began long before the advent of electronic circuits. Charles Babbage designed a mechanical calculator, the Difference Engine, in the 1820s, and in the 1830s went on to design the more general Analytical Engine. These machines, though never completed in his lifetime, laid the groundwork for algorithmic processing. Ada Lovelace, an English mathematician and writer, is often credited as the first computer programmer for her published notes on the Analytical Engine.

The shift towards electronic computers came around World War II. In 1936, British mathematician Alan Turing described an abstract device, now called the Turing machine, which in principle can carry out any computation given sufficient time and memory. The idea became a theoretical foundation for the general-purpose computers that followed. In 1941, the German engineer Konrad Zuse completed the Z3, one of the earliest programmable computers, built from electromechanical relays. The American ENIAC (Electronic Numerical Integrator and Computer), completed in 1945, was fully electronic. It was capable of performing thousands of calculations per second, far more than earlier machines, which made it valuable for scientific and military calculations.

After the war, interest in electronic computers grew rapidly, leading to more powerful machines for commercial use. IBM announced its System/360 family of mainframes in 1964. Its models shared a common architecture, so software written for one model could run on the others. Mainframes like these became the backbone of business operations across various sectors, from banking to airline reservations. Alongside them, smaller and cheaper minicomputers, such as DEC's PDP-8 introduced in 1965, brought computing to laboratories and smaller organizations.

In the late 1970s, a paradigm shift occurred with the introduction of personal computers (PCs). The Apple II arrived in 1977, and IBM launched its PC in 1981, which was soon followed by a wave of PC-compatible machines from other manufacturers. The success of these products democratized computing, making it accessible to individual users. Graphical user interfaces (GUIs), pioneered at Xerox PARC and brought to market in machines such as Apple's Lisa (1983) and Macintosh (1984), made computers easier to use and paved the way for widespread adoption.

The 21st century ushered in the era of cloud computing and mobile devices, redefining our relationship with technology. As cloud services such as Amazon Web Services and Google Cloud Platform became integral parts of IT infrastructure, organizations could rent storage and processing capacity on demand instead of being limited to hardware they owned. Smartphones evolved into powerful tools for communication, entertainment, and productivity, thanks to advances in semiconductor technology and software ecosystems.

In conclusion, the history of computers is a testament to human creativity and the relentless pursuit of efficiency. From mechanical contraptions to the quantum computers now under development, each stage represents a leap forward in understanding and using computational power to improve living standards and advance scientific discovery. As we move forward, the possibilities seem endless, promising further innovations that will continue to shape our future.